You've probably heard of AI-generated images. Over the past few years, they've exploded in popularity, from surreal landscapes to hyper-realistic portraits. The results from programs like Midjourney, DALL-E, or Stable Diffusion are undoubtedly impressive. However, when you take a closer look, you'll quickly notice that this technology has its pitfalls: fundamental weaknesses and frustrating limitations that make life as a content creator much more difficult.

A Random Generator Instead of Creativity

The biggest weakness is obvious: AI image generators are essentially nothing more than highly sophisticated random generators. Although the underlying models are trained on massive datasets to imitate styles and motifs, the final output is ultimately based on probabilities. Unless the tool lets you fix the random seed, using the same prompt multiple times will almost never give you two identical images. This lack of reproducibility is not only frustrating but also problematic if you need consistent results as a content creator.
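To make the "based on probabilities" point concrete, here is a deliberately tiny toy sketch in Python. It is not how Midjourney, DALL-E, or Stable Diffusion work internally; the function `toy_generate` and its prompt-to-brightness mapping are invented purely for illustration. It only shows the principle: the prompt shapes the probabilities, and random draws decide the actual result.

```python
import random
from typing import List, Optional


def toy_generate(prompt: str, size: int = 8, seed: Optional[int] = None) -> List[int]:
    """Return `size` brightness values (0-255) for a toy "image".

    Hypothetical illustration only, not a real image model: the prompt
    merely shifts the probabilities, while random draws decide the values.
    """
    # seed=None lets Python seed the generator from the OS, so every call differs.
    rng = random.Random(seed)
    # Hypothetical mapping: the prompt picks a preferred brightness between 64 and 191.
    preferred = 64 + sum(map(ord, prompt)) % 128
    # Each "pixel" is a random draw around that preferred value, clamped to 0-255.
    return [min(255, max(0, round(rng.gauss(preferred, 40)))) for _ in range(size)]


prompt = "an alien in front of the Austrian Parliament"

# Same prompt, no seed: two runs almost never match.
print(toy_generate(prompt))
print(toy_generate(prompt))

# Same prompt, same seed: the output is reproduced exactly.
print(toy_generate(prompt, seed=42) == toy_generate(prompt, seed=42))  # True
```

Two unseeded calls with the same prompt will almost always return different "images", which is exactly the frustration described above. Passing the same `seed` reproduces the output, and some tools, such as Stable Diffusion, expose a seed setting for this reason. A fixed seed only repeats one specific image, though; it does not guarantee a consistent motif across different prompts.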

Imagine you're a graphic designer and want to create a series of images in the same style and with the same motif. With AI tools, the best you can hope for is that the results look somewhat similar. This unpredictability renders the technology almost useless for many professional applications.

Not a Tool for Content Creators

Another issue is the lack of control over the final result. While traditional image editing software like Photoshop or GIMP allows you to make precise adjustments, AI image generators often leave you relying on luck. Sure, you can refine prompts and tweak parameters, but the process feels more like gambling than a targeted creative effort.

This is where hybrid solutions like Adobe Firefly come into play. With tools like these, you can combine the flexibility of traditional image editing with the advantages of AI assistance. This allows you to create or enhance specific parts of an image without giving up your creative control.

Overwhelmed by Fake Content

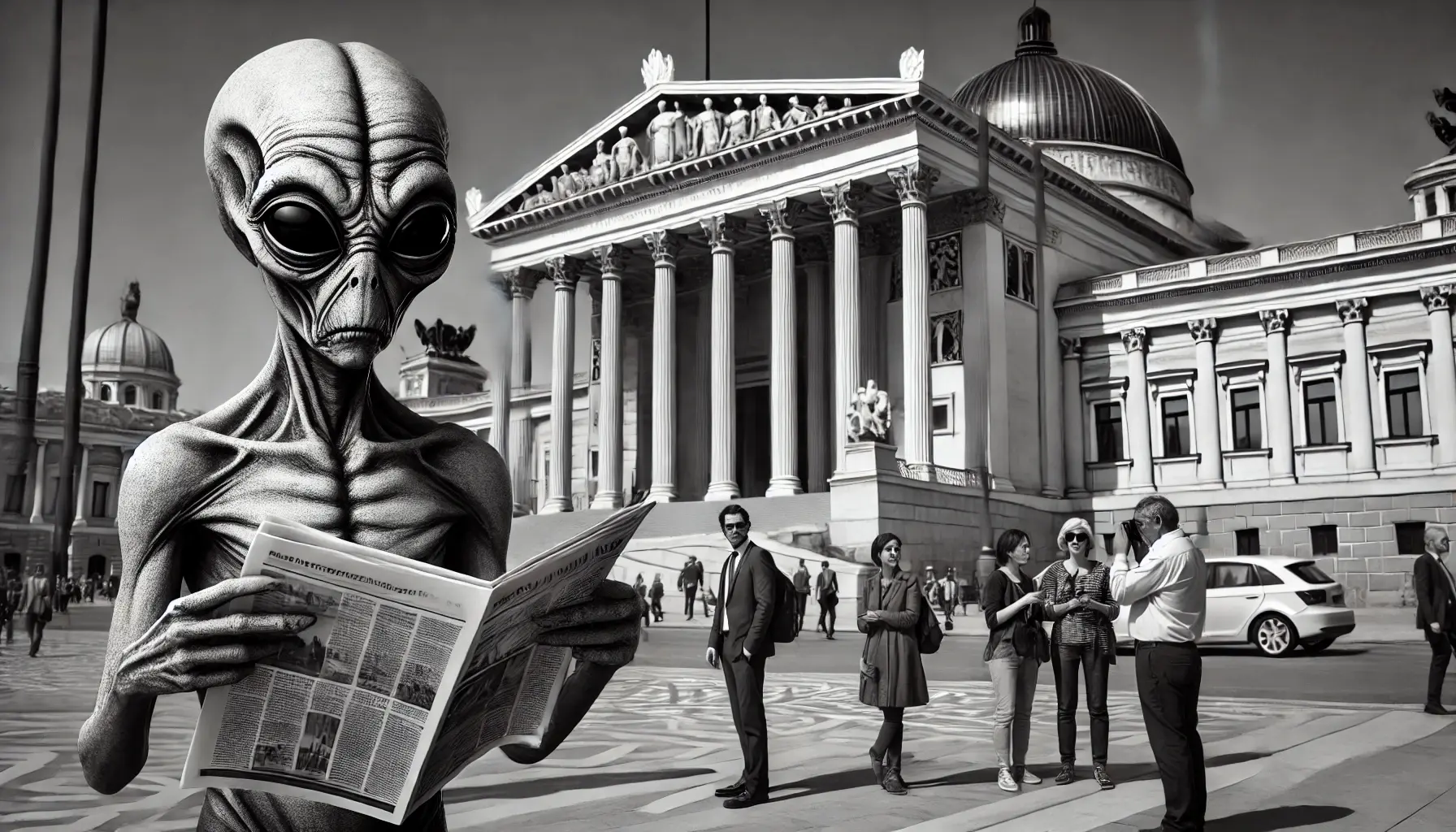

You're likely familiar with this problem as well: social media platforms are flooded with AI-generated images that are often presented as real photographs. These images not only create confusion but are increasingly being used manipulatively to influence political opinions or spread false narratives. This undermines trust in visual media and makes it harder for you to distinguish between genuine and manipulated content.

Something urgently needs to change here. Mandatory labeling of AI-generated images would be an essential step toward ensuring transparency and curbing the spread of fake content. Such measures would not only protect you as a consumer but also promote the responsible use of AI technologies.

Conclusion: Frustration Over Progress

Even though AI-generated images offer impressive possibilities, they are far from being a perfect tool for you as a content creator. The lack of reproducibility, limited control over the final output, and the overwhelming amount of questionable content demonstrate that this technology still has a long way to go.

The most sensible approach for you is to combine AI with traditional image editing, just as Adobe does with Firefly. This is the only way to leverage the benefits of AI without losing your creative control and authenticity.

Hopefully, the industry will focus on solutions that not only impress but also provide genuine value for you. Until then, skepticism remains warranted, and you should pay close attention to what is being sold to you as “real” images on social media.

P.S. The title image was created using DALL-E. On closer inspection, you’ll notice that the alien in front of the Austrian Parliament is indeed an AI-generated image 🙂. I find the result extremely poor, but with more time, it would surely have been possible to generate an image that looks like a real photo.